Purpose:

This article reviews how an interrupt is handled by Qemu.

Background:

My conjecture is that do_interrupt is called whenever an interrupt needs to be passed to the guest OS. By analyzing do_interrupt, we will be able to see what is triggering the interrupt and which part of the code is handling it.

Steps:

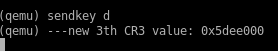

1. Run Qemu under GDB and set a breakpoint at do_interrupt.

This is what the do_interrupt code looks like (target-i386/seg_helper.c).

2. Step over each line until you hit do_interrupt_all. Step into do_interrupt_all.

NOTE: you may have to repeat the step command several times because the function's parameter list spreads over several lines.

It is important to note the interrupt number (intno) in the parameter list. It tells us exactly which interrupt caused the call to do_interrupt.

In our run, intno is 14, which on x86 is the page-fault exception (#PF).

3. Now, let's see what is generating this interrupt. We will do this by viewing the EIP maintained by Qemu.

This address is wrong because the memory of the guest OS is not directly loaded into the memory of the host machine. To find the true value of EIP, we have to set a breakpoint in helper_trace2 so that we can view the code that is actually triggering the interrupt.

Above is the address of the assembly code causing the interrupt. Now we can view the corresponding assembly by using a function that has been added to Qemu.

Now that we know which part of the assembly code is generating the interrupt, we need to determine which part of the assembly code is handling it.

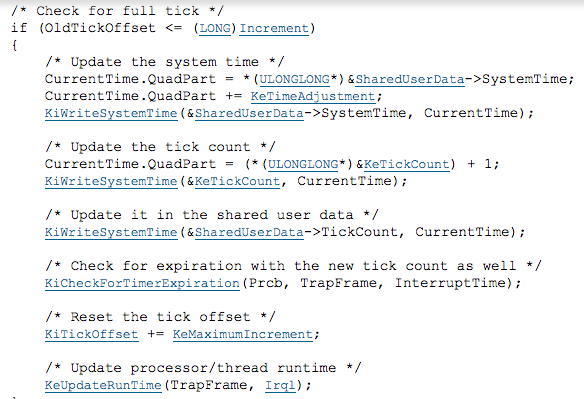

4. We need to find the piece of Qemu code that emulates the guest's Interrupt Descriptor Table (IDT). This table contains an entry for each interrupt vector that tells the CPU where to jump. Continue stepping through do_interrupt_all until you reach another do_interrupt variant. In our case, it is do_interrupt_protected, because the guest is running in 32-bit protected mode. Step into it.

Soon after you step into it, GDB reaches a line that references the interrupt descriptor table.

The following two lines of code are important to how the address of the handler is found.

Every entry in the protected-mode interrupt descriptor table is 8 bytes. Line 607 uses the interrupt number, scaled by the entry size, as an index to determine where in the table to go, and ptr on line 610 points to the entry for this interrupt. We cannot read the entry through ptr from GDB, because ptr holds a guest virtual address, not a host address. Instead, we step through the code some more: the remaining code reads the interrupt descriptor table entry and uses it to determine the next value of EIP. We will extract the handler's address by viewing EIP once it is updated.

5. Step through do_interrupt_protected until env->eip receives a new value.

The value of offset, which is what env->eip is set to, is the address of the interrupt handler.

6. Once again, let's view the associated assembly.

Summary:

The emulated CPU now jumps to the handler code, and the guest OS services the interrupt.